1. Introduction and Goals

The application has the following functional requirements:

- The system will have at least one web frontend, deployed and accessible through the Web.
- Users will be able to register in the system and consult the history of their participation in it:
  - Number of games
  - Correct/failed answers
  - Questions
  - Times
- The system will allow access to user information and generated questions through an API.
- Each question must be answered within a limited period of time.
- Each question will have one correct answer and several incorrect (distractor) answers.
- Both the correct and the incorrect answers will be generated automatically.
- The questions will be generated automatically from Wikidata data.

1.1. Quality Goals

| Quality goal | Motivation | Concrete Scenario | Priority |
|---|---|---|---|
| Usability | A good user experience (UX) gives us a chance to be competitive in the market. | We aim for an attractive, user-friendly graphical interface that is clear and easy to learn. Concretely, 90% of users should be able to use the application without any help. | High |
| Performance efficiency | Efficiency problems cause a very negative UX. | We aim for a response time of less than 3 seconds for a new question to be displayed. | High |
| Maintainability | We expect the project to change and the game modes to be expanded. | It should be easy to add new game modes and to incorporate new question categories and question templates. | Medium |

1.2. Stakeholders

| Role/Name | Contact | Expectations |
|---|---|---|
| Students | Manuel González Santos, Noel Expósito Espina, Yago Fernández López, Manuel de la Uz González, Rubén Fernández Valdés, Javier Monteserín Rodríguez | Passing the subject as the main objective, and creating a project we can be proud of. |
| Teachers | José Emilio Labra Gayo | To ensure that we learn the contents of the subject, and especially that we learn to work as a team. |
| Users | Any user of the application | That the application is easy and fun to use. |
| Wikidata Community | Wikimedia Deutschland | They are interested in people using their services, and new developers may discover Wikidata thanks to this project. |
| RTVE | RTVE | This project can serve as publicity, since people may become interested in the actual show through the game. |

2. Architecture Constraints

| Constraint | Explanation |
|---|---|
| Questions are generated from Wikidata data | The questions must be generated automatically from Wikidata data. |
| The application is accessible from a web browser | The application must be accessible through a frontend deployed on a web page. |
| The application offers a way to be monitored | The application must offer a way for sysadmins to monitor it. |

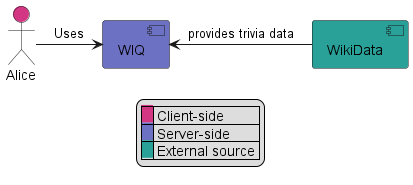

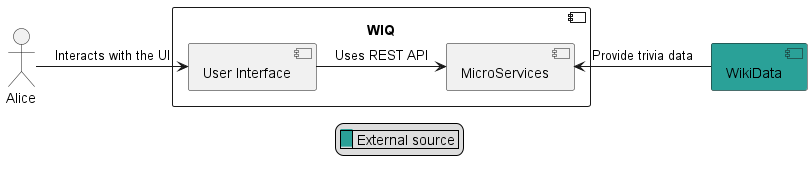

3. System Scope and Context

3.1. Business Context

| Element | Description |
|---|---|
| User | Plays the game through their device. |
| WIQ | The application that runs the game. |
| Wikidata API | WIQ takes information from Wikidata in order to form the questions and their answers. |

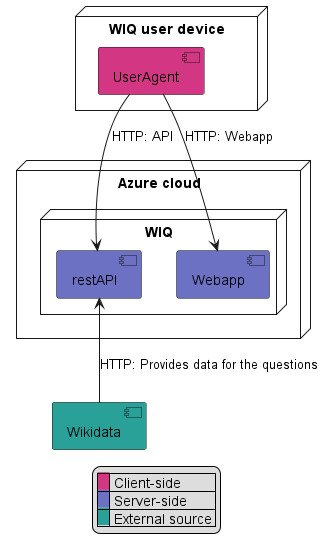

3.2. Technical Context

| Element | Input | Output |
|---|---|---|
| User Agent | User inputs | Requests to the REST API |
| User Agent | User requests page | Requests to the webapp |
| WebApp | Requests to the webapp | The webpage |
| REST API | User agent requests | Application response |
| Wikidata | Queries | Information related to the questions |
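The "Queries" input of the Wikidata row can be illustrated with a small sketch. It assumes the public Wikidata Query Service SPARQL endpoint and a made-up example query (countries and their capitals); the helper names `buildSparqlUrl` and `parseBindings` are hypothetical and are not taken from the WIQ code base.

```javascript
// Public SPARQL endpoint of the Wikidata Query Service.
const WIKIDATA_SPARQL_ENDPOINT = "https://query.wikidata.org/sparql";

// Example query (an assumption, not an actual WIQ template): countries and capitals.
// P31 = "instance of", Q6256 = "country", P36 = "capital".
const CAPITALS_QUERY = `
SELECT ?countryLabel ?capitalLabel WHERE {
  ?country wdt:P31 wd:Q6256;
           wdt:P36 ?capital.
  SERVICE wikibase:label { bd:serviceParam wikibase:language "en". }
}
LIMIT 50`;

// Hypothetical helper: builds the GET URL for a SPARQL query with a JSON response.
function buildSparqlUrl(query) {
  const url = new URL(WIKIDATA_SPARQL_ENDPOINT);
  url.searchParams.set("query", query.trim());
  url.searchParams.set("format", "json");
  return url.toString();
}

// Hypothetical helper: flattens the standard SPARQL JSON result format
// ({ results: { bindings: [...] } }) into plain objects.
function parseBindings(sparqlJson) {
  return sparqlJson.results.bindings.map((row) =>
    Object.fromEntries(Object.entries(row).map(([name, cell]) => [name, cell.value]))
  );
}
```

The resulting URL could be passed to any HTTP client; the response rows then become the raw material for questions and answers.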

4. Solution Strategy

4.1. Technologies used

The following technologies have been used to develop this project:

- JavaScript is a high-level, interpreted programming language primarily used for building dynamic and interactive web applications.
- React is a JavaScript library for building user interfaces for web applications.
- MariaDB is an open-source relational database management system. It offers features such as ACID compliance and compatibility with MySQL.
- MongoDB is an open-source NoSQL database management system. It offers features such as high availability, horizontal scalability, and schema flexibility thanks to its document-based data model in a JSON-like format.
- Docker is a platform and tool that allows developers to build, package, distribute, and run applications within lightweight, portable containers that encapsulate everything needed to run an application.
- Tailwind CSS is a highly customizable CSS framework that provides a set of low-level utility classes that can be applied directly to HTML elements to style them.
- Material UI is a library that implements Google's Material Design.
- React Testing Library is a library for testing React components.
- React-router-dom offers easy configuration of application routes on the client side. It is only necessary to declare each route and its associated component.

4.1.1. Why we have chosen these technologies

- We went with JavaScript as our programming language because it is very widespread, which makes it easy to find information about it. We have also worked with it in the past, so we have some base knowledge of it.
- React is a very popular library at the moment, and information about it is easy to find. React makes building web user interfaces much easier through reusable components that re-render as soon as their data changes.
- We chose MariaDB as our RDBMS for its similarity to MySQL, a system that is familiar to everyone on the team. Through an ORM (Sequelize, see ADR 09), tables can be created and managed without having to deal with SQL files, which simplifies the process.
- Docker is a very popular containerization tool, which gives us a consistent environment for the project. It provides standardized versions without having to install programs, as well as automatic deployment.
- Tailwind makes styling the application more flexible, simpler and quicker.
- Material UI saves us time when designing the interfaces that the user will work with.
- To encourage better testing practices, we included React Testing Library.
- React-router-dom, as mentioned before, makes building routes much easier. In addition, when the user changes route, the virtual DOM itself renders the new component.

4.2. Project structuring

The application is organised into microservices. This keeps the components of the project isolated and easier to maintain, with modules that are loosely coupled and therefore more independent.

4.3. Decisions made to achieve quality goals

- Usability: we ran usability tests on the app and, based on the results, changed the color palette and the arrangement of some elements to match the testers' experience.
- Performance efficiency: we implemented a cache that stores 1000 questions and replaces the 100 oldest every 24 hours. This way questions are always available, and there is no need to wait for Wikidata to respond.
- Maintainability: we designed the system so that new question templates are easy to create, and a newly added template is automatically incorporated into the application. There is a guide on how to do this in the question_service.
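The cache policy from the performance bullet (1000 questions, the 100 oldest replaced every 24 hours) can be sketched as follows. This is a minimal in-memory illustration, not the actual implementation: the class name `QuestionCache`, its methods, and the synchronous `generate` callback are all assumptions made for brevity (real generation from Wikidata would be asynchronous).

```javascript
// Sketch of the cache policy: fixed capacity, periodic refresh of the oldest entries.
class QuestionCache {
  // `generate` is a hypothetical function that returns one fresh question.
  constructor(generate, { capacity = 1000, refreshBatch = 100 } = {}) {
    this.generate = generate;
    this.capacity = capacity;
    this.refreshBatch = refreshBatch;
    this.entries = []; // kept ordered from oldest to newest
  }

  // Fills the cache up to its capacity.
  fill(now = Date.now()) {
    while (this.entries.length < this.capacity) {
      this.entries.push({ question: this.generate(), createdAt: now });
    }
  }

  // Serves a random cached question without contacting Wikidata.
  next() {
    if (this.entries.length === 0) throw new Error("cache is empty");
    const index = Math.floor(Math.random() * this.entries.length);
    return this.entries[index].question;
  }

  // Replaces the oldest `refreshBatch` entries with newly generated questions.
  refreshOldest(now = Date.now()) {
    const count = Math.min(this.refreshBatch, this.entries.length);
    this.entries.splice(0, count);
    for (let i = 0; i < count; i++) {
      this.entries.push({ question: this.generate(), createdAt: now });
    }
    return count;
  }
}

// 24 hours in milliseconds, the refresh period mentioned in the text.
const DAY_MS = 24 * 60 * 60 * 1000;
```

A caller would typically run `cache.fill()` once at startup and then schedule `setInterval(() => cache.refreshOldest(), DAY_MS)`.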

4.4. Work methodology decisions

- This project will be developed entirely in English, as it is the most common language in programming and we are used to working with it daily in other projects.
- We use a Kanban board in GitHub to quickly see the current state of the project, what everyone is working on and what remains to be done.
- Communication between team members happens mostly through GitHub, using issues to create and assign tasks; through WhatsApp, to ask the rest of the team for quick advice when needed; and in meetings.
- The work methodology is based on the Agile methodology. Specifically, we use SCRUM, which allows us to divide the work equitably and keep development constant. In addition, we have used it previously and are accustomed to it.

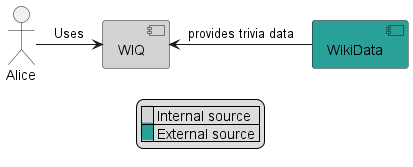

5. Building block view

5.1. Level 1: Whitebox of the Overall System

- Motivation
  - The WIQ application has the basic structure needed to generate questions from Wikidata and ask them to the user.
- Contained Building Blocks

| Name | Description |
|---|---|
| User | User of the application, who will interact with it. |
| WIQ | The system to be implemented. It will take the necessary information from Wikidata to generate questions. |
| Wikidata | Has the necessary information to generate generic trivia questions for the users. |

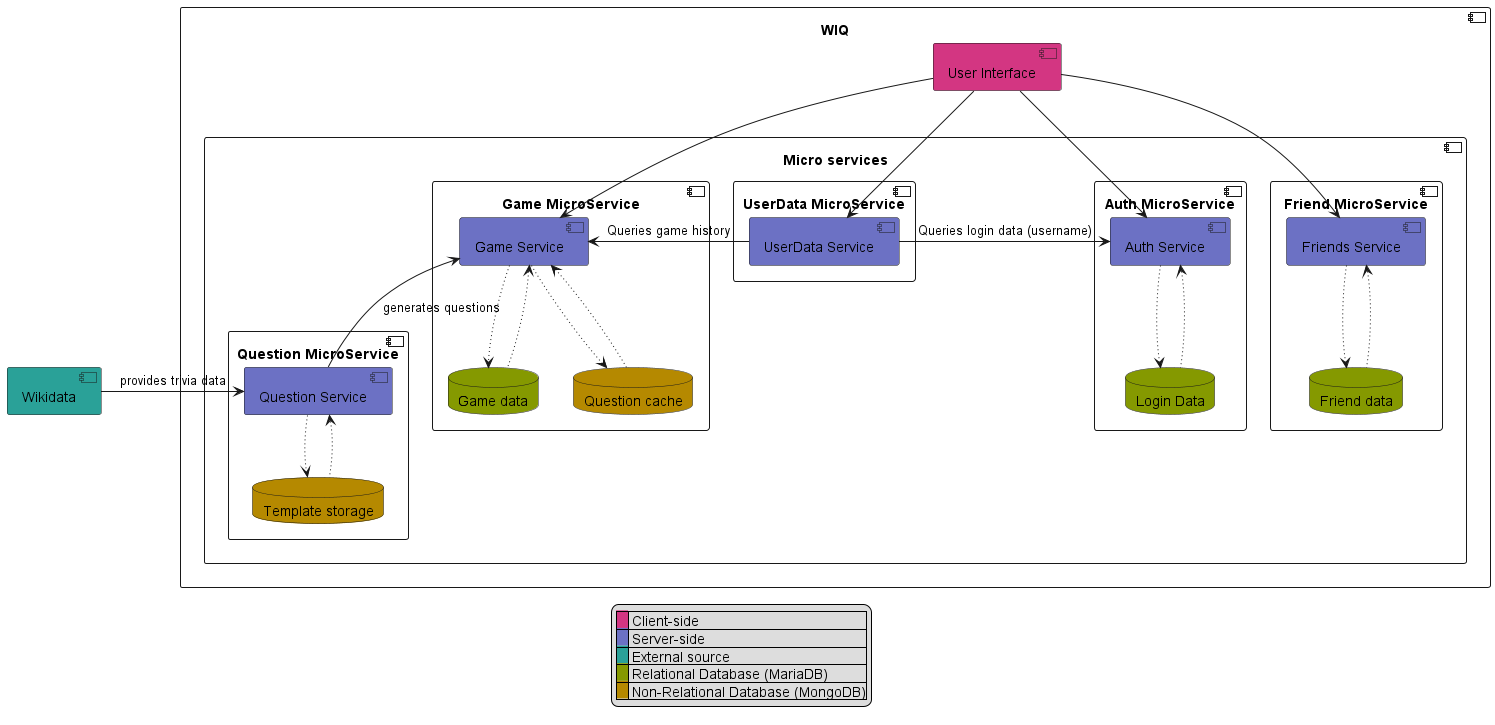

5.2. Level 2

- Motivation
  - This level shows the main data flow of the application (towards the microservices) and the architectural decision to use microservices, to which the UI connects.
- Contained Building Blocks

| Name | Description |
|---|---|
| User Interface | Provides an interface that the end user of the application can use. |
| MicroServices | They provide a backend for the UI, while keeping the app modular and easy to distribute and update. |

5.3. Level 3

- Motivation
  - This diagram shows the microservices we decided on, which provide all the operations the application needs to work as intended. For now it is a WIP diagram that will evolve as the project progresses.
- Contained Building Blocks

| Name | Description |
|---|---|
| Game Service | The microservice that handles game creation, maintenance and ending. It records all games and the user's scores. |
| UserData Service | A microservice that provides the UI with all the data needed to show user statistics, such as average score and game history. |
| Auth Service | A microservice that users use to log in to the application. It works via token authentication so that the login is valid across microservices. |
| Question Service | Its main purpose is to be an abstraction over the Wikidata API, so that the other microservices can ask it for questions directly instead of having to deal with the Wikidata API. |
| Game data and Login data | The main databases of the application. Together they store all of its important persistent data (which is why MariaDB was chosen, to maximise data consistency). |
| Template storage and Question cache | Secondary storages whose deletion is not critical to the application. Template storage holds the templates used to generate the questions, and the question cache temporarily holds a number of questions to reduce latency. MongoDB was chosen for its performance. |

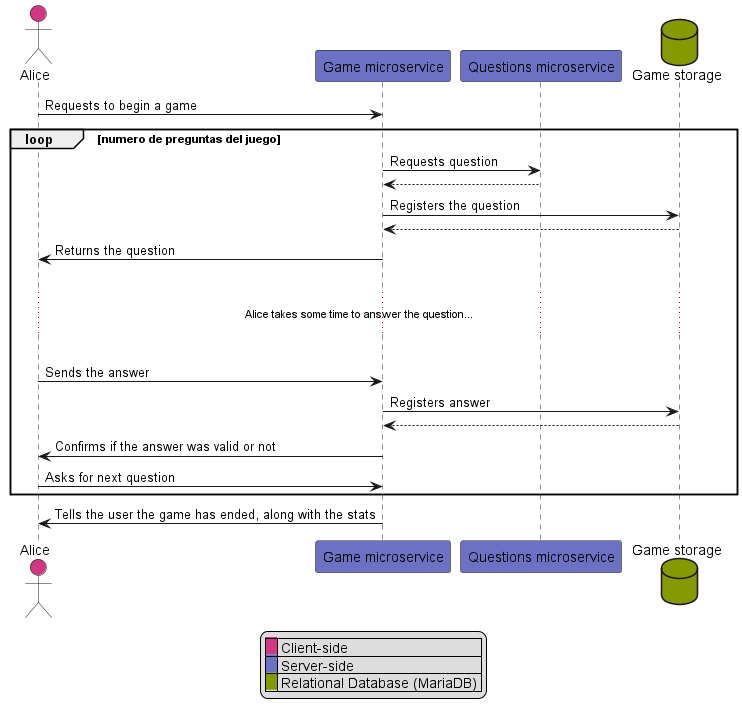
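To illustrate how a template store can make new question types cheap to add, here is a minimal sketch. The template shape (`category`, `text`, `subjectField`, `answerField`) and the function names are hypothetical; the real format is the one described in the question_service guide.

```javascript
// Hypothetical template shape, used only for illustration.
const templates = [
  {
    category: "geography",
    text: "What is the capital of {subject}?",
    subjectField: "countryLabel",
    answerField: "capitalLabel",
  },
];

// Adding a new question type amounts to registering one more entry.
function registerTemplate(template) {
  for (const key of ["category", "text", "subjectField", "answerField"]) {
    if (typeof template[key] !== "string") throw new TypeError(`missing field: ${key}`);
  }
  templates.push(template);
}

// Fills the {subject} placeholder with a value taken from one result row.
// A replacer function is used so that "$" in the value is not treated specially.
function renderQuestionText(template, row) {
  return template.text.replace("{subject}", () => row[template.subjectField]);
}
```

With this shape, the generation code never needs to change when a template is added, which is the property the maintainability goal asks for.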

6. Runtime View

6.1. User plays a questions game

When a user starts a game, they do so via the game service (which internally uses the questions service). The game service provides questions until the end of the game.

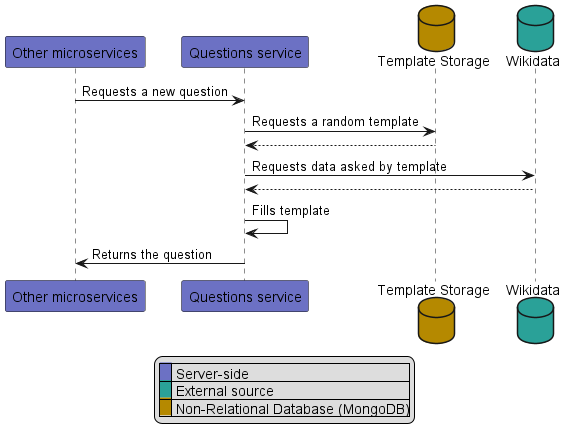
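The game flow can be sketched as a small in-memory model. Everything here is an assumption for illustration: the `Game` class, its field names, the question shape (`{ correct }`) and the 20-second default time limit are not taken from the game service.

```javascript
// Hypothetical in-memory model of one game.
class Game {
  constructor(questions, timeLimitMs = 20000) {
    this.questions = questions;     // questions supplied by the questions service
    this.timeLimitMs = timeLimitMs; // time allowed per question (assumed value)
    this.index = 0;
    this.correct = 0;
    this.failed = 0;
    this.times = [];                // milliseconds spent on each question
  }

  get finished() {
    return this.index >= this.questions.length;
  }

  currentQuestion() {
    return this.finished ? null : this.questions[this.index];
  }

  // Records an answer; an answer given after the time limit counts as failed.
  // Returns true when the game has ended.
  answer(choice, elapsedMs) {
    if (this.finished) throw new Error("game is over");
    const question = this.questions[this.index];
    const inTime = elapsedMs <= this.timeLimitMs;
    if (inTime && choice === question.correct) this.correct++;
    else this.failed++;
    this.times.push(Math.min(elapsedMs, this.timeLimitMs));
    this.index++;
    return this.finished;
  }
}
```

The counters kept here (correct, failed, times) are the same figures the functional requirements list for the user's history.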

6.2. A new question is generated

Other services can ask the questions service to generate a question. The service acts as an abstraction over the Wikidata API, since callers are only interested in the questions and not in the raw data.

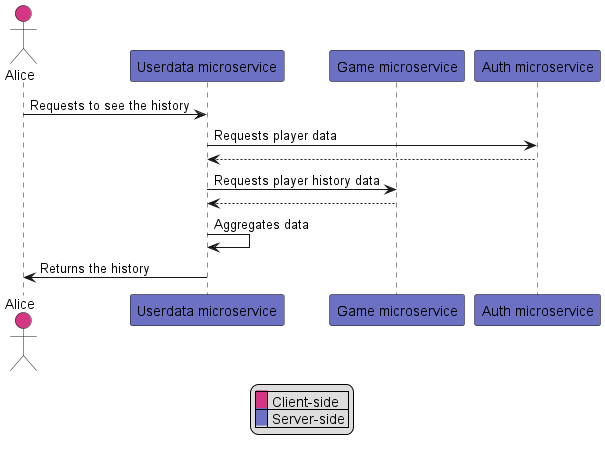
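One way to turn raw rows into a question with one correct answer and several distractors is sketched below. The row shape (`{ subject, answer }`), the function names and the default of three distractors are assumptions; the questions service may select distractors differently.

```javascript
// Fisher-Yates shuffle on a copy; `random` is injectable so the sketch is testable.
function shuffle(items, random = Math.random) {
  const copy = [...items];
  for (let i = copy.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    [copy[i], copy[j]] = [copy[j], copy[i]];
  }
  return copy;
}

// Builds one question from rows of { subject, answer } pairs (a hypothetical shape).
function buildQuestion(rows, distractorCount = 3, random = Math.random) {
  const target = rows[Math.floor(random() * rows.length)];
  // Distinct wrong answers are taken from the answers of the other rows.
  const pool = [...new Set(rows.map((r) => r.answer))].filter((a) => a !== target.answer);
  if (pool.length < distractorCount) throw new Error("not enough distinct answers");
  const distractors = shuffle(pool, random).slice(0, distractorCount);
  return {
    subject: target.subject,
    correct: target.answer,
    options: shuffle([target.answer, ...distractors], random),
  };
}
```

Taking distractors from the same result set keeps them plausible, since they are answers of the same kind as the correct one.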

6.3. User looks at their history

The user can look at their history via the UserData microservice, which provides access to all of the user's data.

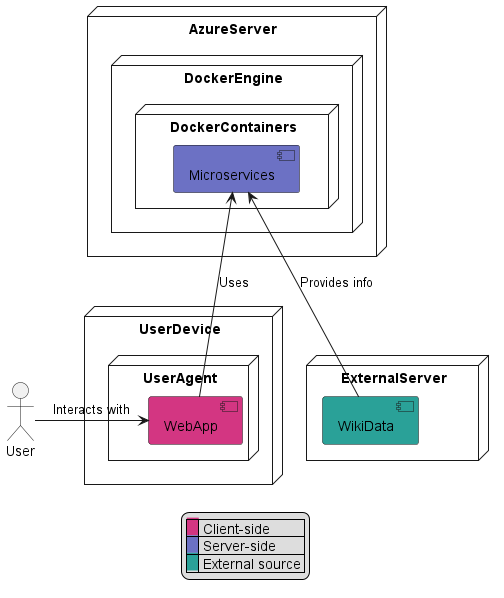
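The statistics shown in the history can be derived from stored game records. The sketch below assumes a hypothetical record shape (`{ correct, failed, times }`, with times in milliseconds); the actual schema of the game data is not shown in this document.

```javascript
// Aggregates finished games into the figures shown in the user's history.
function summarizeHistory(games) {
  const totals = games.reduce(
    (acc, g) => ({
      correct: acc.correct + g.correct,
      failed: acc.failed + g.failed,
      timeMs: acc.timeMs + g.times.reduce((sum, t) => sum + t, 0),
    }),
    { correct: 0, failed: 0, timeMs: 0 }
  );
  const questions = totals.correct + totals.failed;
  return {
    games: games.length,
    questions,
    correct: totals.correct,
    failed: totals.failed,
    // Average time per answered question; 0 when there is no data yet.
    averageTimeMs: questions === 0 ? 0 : totals.timeMs / questions,
  };
}
```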

7. Deployment View

7.1. Infrastructure Level 1

- Motivation
  - During development and testing, the app runs in each developer’s localhost environment (using Docker for standardization). In the production stage, we aim for the application to run on an Azure server. We hope for high availability of the application (95% would suffice for our needs).
- Quality and/or Performance Features
  - We have not tested the application performance, so we can’t analyze it yet.
- Mapping of Building Blocks to Infrastructure

| Element | Description |
|---|---|
| Azure Server | Where the application will be deployed. |
| Docker Engine | Daemon inside the Azure server that runs our Docker containers. |
| WebApp | The frontend of our application. |
| MicroServices | They form the backend of the application and manage every aspect of it. |
| Wikidata | An external service that provides the data the application needs to work properly. |

8. Cross-cutting Concepts

8.1. Microservices architecture

We have decided that our architecture will be based on microservices, which means that the application is divided into services that each perform a specific function. The services can communicate with each other using their respective APIs or via the shared database.

8.2. User Experience

The application will consist of a website where the user will be able to play our WIQ game. However, as we have not started coding yet, we do not have a clear idea of how it will look or whether we will add extra functionality to the webpage.

8.3. Session handling

The application will include a system to control user access and keep track of their games (and other data). This system will be based on the token pattern rather than the session pattern. For now we have decided to use JsonWebToken for token generation and validation.
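The token pattern can be illustrated with a minimal sketch of an HS256-signed token. Note that this does not use the JsonWebToken library chosen by the project: to stay self-contained it reproduces the idea with Node's built-in `crypto` module, and the function names `signToken` and `verifyToken` are hypothetical.

```javascript
const crypto = require("crypto");

const toBase64Url = (value) => Buffer.from(value).toString("base64url");

// HMAC-SHA256 over the "header.body" part of the token.
function hmac(data, secret) {
  return crypto.createHmac("sha256", secret).update(data).digest();
}

// Issues a signed token with an expiry claim (seconds since the epoch).
function signToken(payload, secret, ttlSeconds = 3600, nowMs = Date.now()) {
  const header = toBase64Url(JSON.stringify({ alg: "HS256", typ: "JWT" }));
  const exp = Math.floor(nowMs / 1000) + ttlSeconds;
  const body = toBase64Url(JSON.stringify({ ...payload, exp }));
  const signature = hmac(`${header}.${body}`, secret).toString("base64url");
  return `${header}.${body}.${signature}`;
}

// Returns the payload if the signature is valid and the token has not expired, else null.
function verifyToken(token, secret, nowMs = Date.now()) {
  const parts = token.split(".");
  if (parts.length !== 3) return null;
  const [header, body, signature] = parts;
  const expected = hmac(`${header}.${body}`, secret);
  const received = Buffer.from(signature, "base64url");
  // Constant-time comparison; the length check avoids an exception on mismatch.
  if (received.length !== expected.length || !crypto.timingSafeEqual(received, expected)) {
    return null;
  }
  const payload = JSON.parse(Buffer.from(body, "base64url").toString("utf8"));
  return payload.exp > Math.floor(nowMs / 1000) ? payload : null;
}
```

Because any service that knows the secret can verify a token without looking up session state, this pattern fits an architecture in which several microservices must recognise the same login.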

8.4. Persistence

Persistence across the microservices of the application will be handled with a relational SQL database (specifically MariaDB), which also helps the different microservices share and synchronise their data.

8.5. Security & Safety

To ensure user safety, we will store passwords using a password-safe hash such as bcrypt.
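The idea of a salted, deliberately slow password hash can be sketched as follows. The text names bcrypt; this sketch swaps in scrypt from Node's built-in `crypto` module purely so that it runs without extra dependencies, and the `salt:hash` storage format and function names are assumptions.

```javascript
const crypto = require("crypto");

// Hashes a password with a random salt; scrypt is deliberately expensive to compute.
function hashPassword(password) {
  const salt = crypto.randomBytes(16);
  const hash = crypto.scryptSync(password, salt, 64);
  return `${salt.toString("hex")}:${hash.toString("hex")}`;
}

// Recomputes the hash with the stored salt and compares in constant time.
function verifyPassword(password, stored) {
  const [saltHex, hashHex] = stored.split(":");
  const expected = Buffer.from(hashHex, "hex");
  const actual = crypto.scryptSync(password, Buffer.from(saltHex, "hex"), expected.length);
  return crypto.timingSafeEqual(actual, expected);
}
```

The per-password salt means that two users with the same password get different stored values, and the cost of each hash slows down brute-force attempts.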

8.6. Testing

To avoid errors in our application, we will include tests that cover both the frontend and the backend, with special attention to e2e tests, unit tests and integration tests.

8.7. Internationalization

At this point in the project we are not sure whether we will implement internationalization; it is a decision that we will take as the project progresses.

9. Architecture Decisions

We have added the ADRs to the Wiki section in the GitHub page, so we provide the links to them:

- ADR 01 → JavaScript
- ADR 02 → React
- ADR 03 → MariaDB
- ADR 04 → Docker
- ADR 05 → Tailwind-CSS
- ADR 06 → Material UI
- ADR 07 → Testing react library
- ADR 08 → React-router-dom
- ADR 09 → Sequelize ORM
- ADR 10 → MongoDB
- ADR 11 → Question Cache
- ADR 12 → Question Templates
- ADR 13 → Prometheus
- ADR 14 → Grafana dashboard
- ADR 15 → Data migration
- ADR 16 → i18next
- ADR 17 → CodeScene

10. Quality Requirements

10.1. Quality Tree

| Quality Category | Quality | Description | Scenario |
|---|---|---|---|
| Usability | Easy to use | Ease of use by the user when playing games or moving around the application. | SC1 |
| | Easy to learn | Game modes should be intuitive. | |
| Maintainability | Robustness | The application must be able to respond to user requests first. | SC2 |
| | Persistence | There will be no partial loss of user information and data. | |
| Performance efficiency | Response time | The application should not exceed 3 seconds of waiting time. | SC3 |
| Security | Integrity | User data must be kept confidential and secure at all times. | |
| Cultural and Regional | Multi-language | The application texts must be displayed in English. | SC4 |

10.2. Quality Scenarios

| Id | Scenario |
|---|---|
| SC1 | A new user registers in the application and can start playing without the need to view a user guide. |
| SC2 | A user performs an action in the application that results in an internal error, but the user can still use the application normally. |
| SC3 | A playing user will be viewing the different questions with little or no waiting time. |
| SC4 | An English-speaking user will be able to use and play with the application without any problems. |

11. Risks and Technical Debts

11.1. Technical risks

| Risk | Description | Possible measures |
|---|---|---|
| Slow to display questions | If a large number of users use the application at the same time, our API can become a bottleneck when sending requests to Wikidata. | Use a container orchestrator such as Kubernetes to replicate our API and thus balance the load of requests. Another possible solution would be to store the results of queries to Wikidata for later use, so that no request has to be made at that instant. |
| Obsolescence of questions | If the questions requested from Wikidata are stored, some of them may become obsolete. | A separate microservice could be created whose purpose is to update the answers to the questions from time to time. |

11.2. Commercial or domain risks

| Risk | Description | Possible measures |
|---|---|---|
| Wikidata could limit the use of its API | If a large number of users use the application at the same time, Wikidata itself may limit the number of requests or even ban us from using it. | Store the results of queries to Wikidata for later use, so that no request has to be made at the time. |

12. Testing Report

12.1. Unit Tests

Each component has its own unit tests, which verify that it behaves correctly and thus add reliability and robustness to the project.

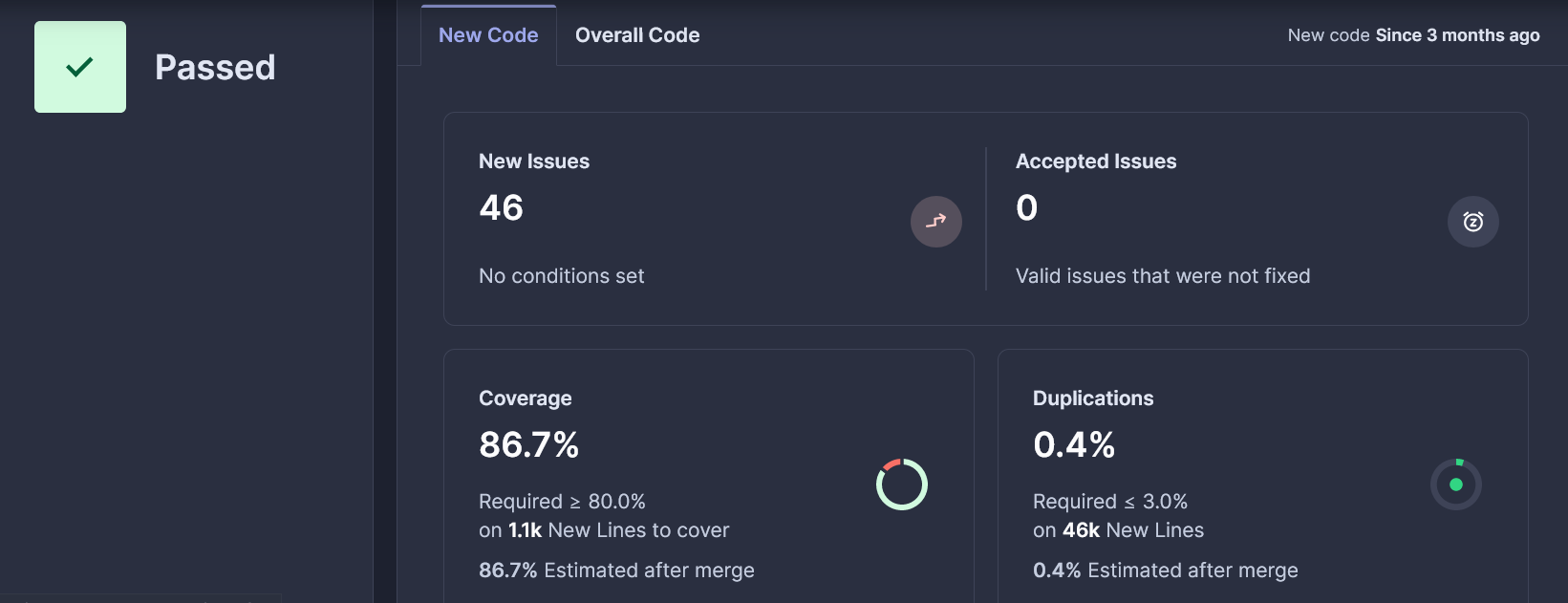

12.1.1. Code coverage result

We use the Sonar tool to ensure that the code is well covered by tests: if less than 80% of the code is covered, or if more than 3% of the code is duplicated, it raises an error and we cannot integrate our changes until both thresholds are met.

It was challenging for us to maintain the code coverage at 80%, either due to our lack of experience with this tool or because it didn’t function correctly. Some tests have decreased the coverage, while others have inflated it beyond what accurately reflects the security of the code. Nonetheless, it has helped us to develop numerous unit tests and make the project very robust.
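The gate rule itself is simple arithmetic and can be sketched as a small check. The thresholds (80% coverage, 3% duplication) come from the text; the `metrics` object and the function name are hypothetical and do not reflect Sonar's actual report format or configuration.

```javascript
// Thresholds taken from the text: at least 80% coverage, at most 3% duplication.
const GATE = { minCoverage: 80, maxDuplication: 3 };

// `metrics` is a hypothetical object such as { coverage: 82.5, duplication: 1.2 }.
function checkQualityGate(metrics, gate = GATE) {
  const failures = [];
  if (metrics.coverage < gate.minCoverage) {
    failures.push(`coverage ${metrics.coverage}% is below ${gate.minCoverage}%`);
  }
  if (metrics.duplication > gate.maxDuplication) {
    failures.push(`duplication ${metrics.duplication}% is above ${gate.maxDuplication}%`);
  }
  return { passed: failures.length === 0, failures };
}
```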

12.2. Acceptance Tests

With acceptance tests, we aim to automatically verify that our system meets the requirements, confirm the integrity of our system with Wikidata, and detect any unexpected issues that may arise unnoticed.

- We focus our efforts on testing the following features:
  - Registering a new user
  - Playing a game
  - Playing the different game modes

12.3. Load tests

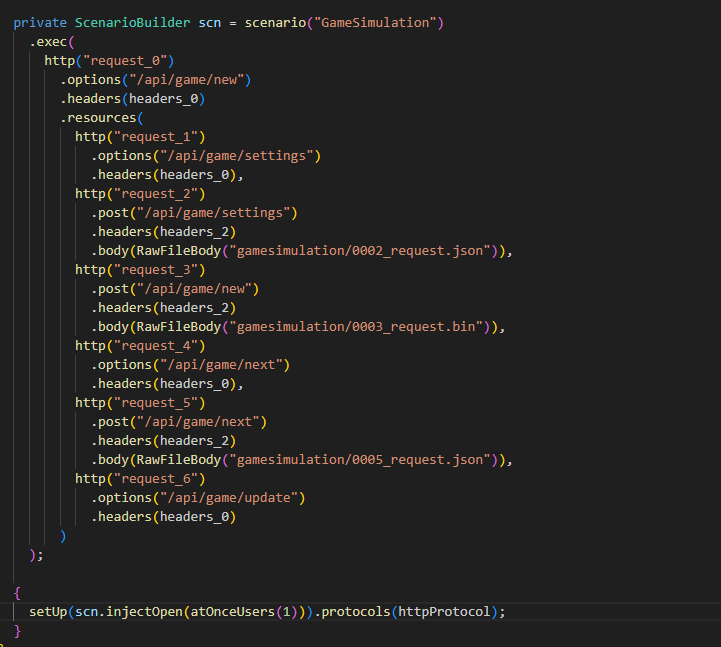

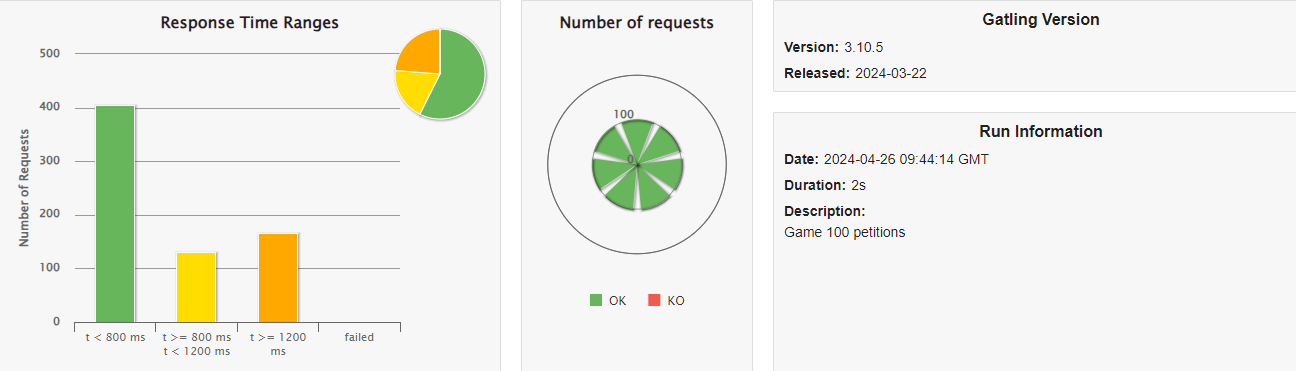

Load tests measure performance under normal or peak load. To carry out these tests we used Gatling, a tool that allows us to record an example of use and then vary the amount of load applied to it.

As one of our quality goals is performance efficiency, we have focused our tests on measuring the performance of our question cache, which we explain in our ADR: ADR 11.

The first step was recording the start of a normal game to see how it loads the question. We will add all the results we got from Gatling in a folder in the documentation section, in case you want to consult it in depth.

This is part of the code that it generated:

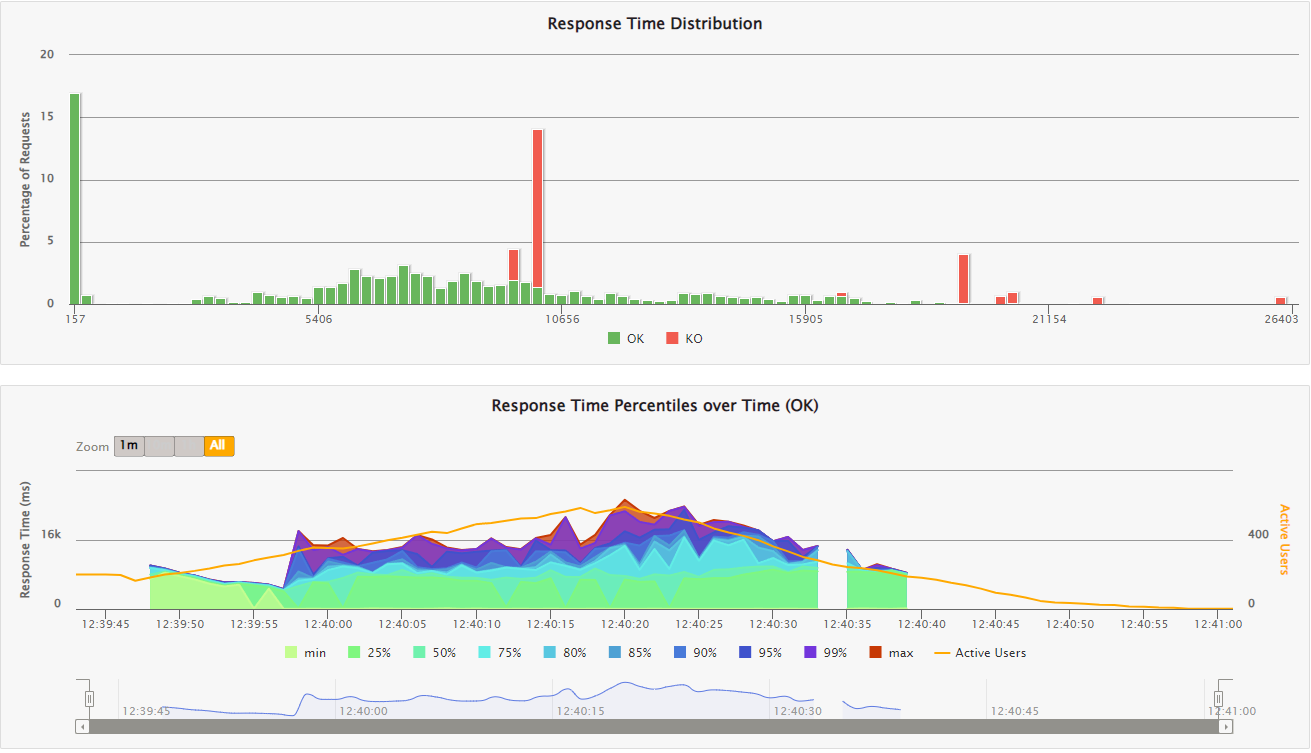

As we are quite confident in our cache, we start with 100 concurrent requests:

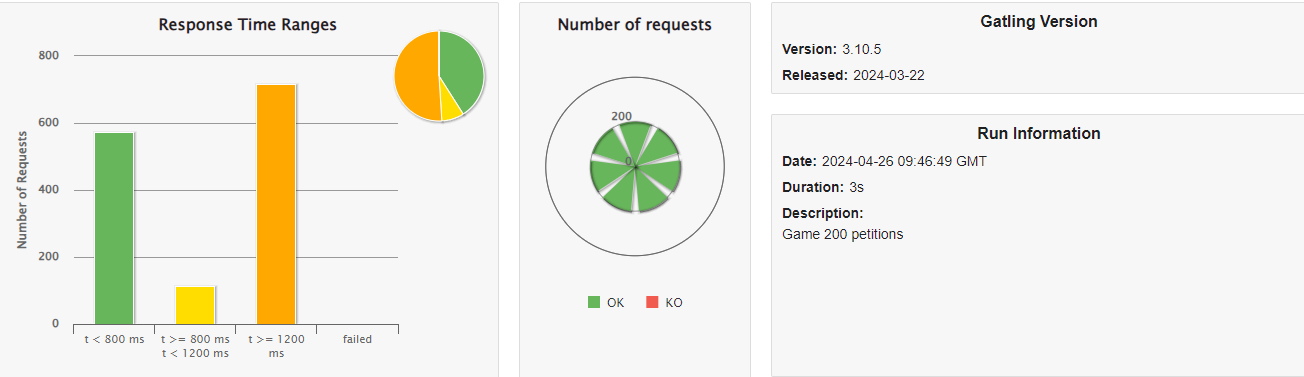

As you can see, we can handle 100 requests in 2 seconds without any of them failing. The same happens with 200 requests, which take only 3 seconds.

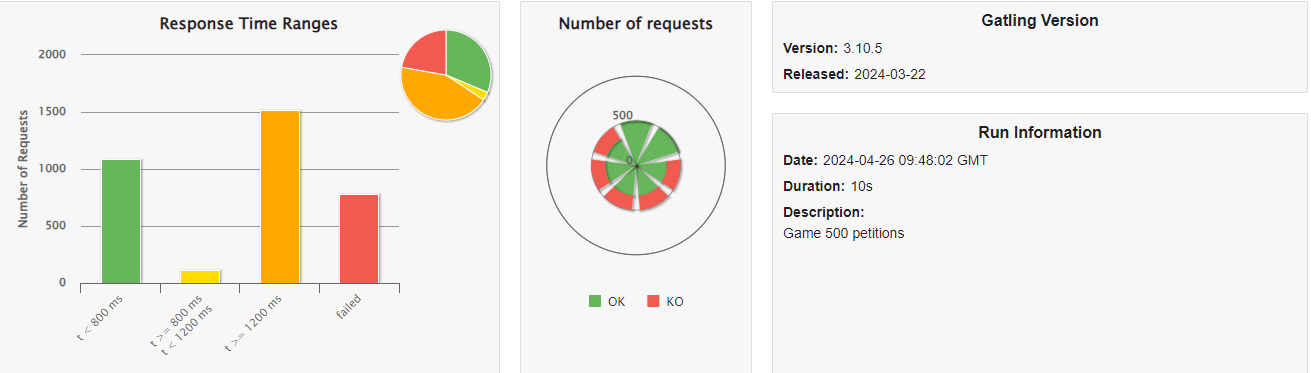

The problems start at around 500 requests, where some requests begin to fail due to timeout exceptions. Even so, around 80% of the requests finish correctly, and the whole run takes only 10 seconds.

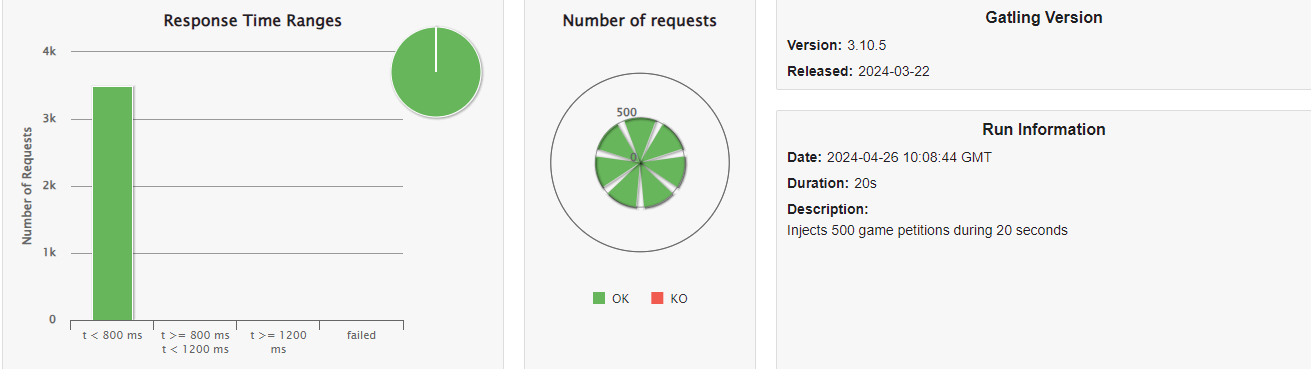

We consider that being able to cope with more than 200 simultaneous requests is quite a good result. We ran further load tests, for example 500 requests distributed over 20 seconds, which gave very good results.

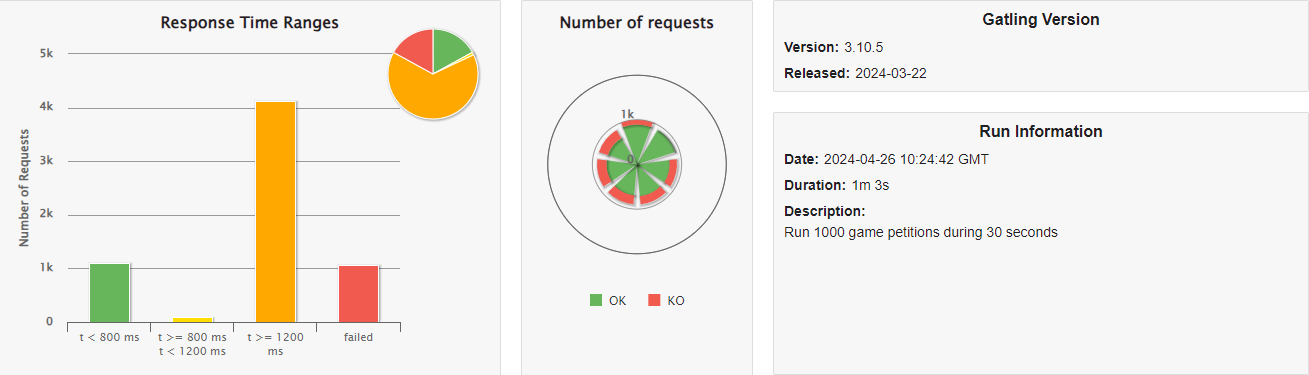

We also loaded 1000 requests over 30 seconds, which was fairly fast, although some of the requests failed.

To finish the cache-related tests, we combined several of the injection options that Gatling provides in the code.

The code for the last simulation is `atOnceUsers(200), nothingFor(5), rampUsers(500).during(20), constantUsersPerSec(30).during(15).randomized()`.

It first loads 200 requests at once, then waits for 5 seconds, then loads 500 requests distributed over 20 seconds, and finally loads a constant 30 requests per second for 15 seconds at randomized intervals.
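The total load of this combined profile can be worked out step by step. The sketch below is plain JavaScript arithmetic, not Gatling code; the `profile` array and `summarizeProfile` function are hypothetical names that simply mirror the four injection steps of the simulation.

```javascript
// Each entry mirrors one injection step of the simulation described above.
const profile = [
  { step: "atOnceUsers", users: 200 },
  { step: "nothingFor", seconds: 5 },
  { step: "rampUsers", users: 500, seconds: 20 },
  { step: "constantUsersPerSec", rate: 30, seconds: 15 },
];

// Totals the users injected and the time the whole profile takes.
function summarizeProfile(steps) {
  let users = 0;
  let seconds = 0;
  for (const s of steps) {
    switch (s.step) {
      case "atOnceUsers": // all users arrive immediately
        users += s.users;
        break;
      case "nothingFor": // pause, no users injected
        seconds += s.seconds;
        break;
      case "rampUsers": // users spread evenly over the duration
        users += s.users;
        seconds += s.seconds;
        break;
      case "constantUsersPerSec": // rate * duration users in total
        users += s.rate * s.seconds;
        seconds += s.seconds;
        break;
      default:
        throw new Error(`unknown step: ${s.step}`);
    }
  }
  return { users, seconds };
}
```

For this profile the result is 200 + 500 + 30 × 15 = 1150 users injected over 5 + 20 + 15 = 40 seconds; with randomized intervals, the last step averages 30 users per second rather than injecting exactly 30 every second.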

In this graph we can see that the problems in this test appear during the 500 distributed requests and the 30 constant requests per second.

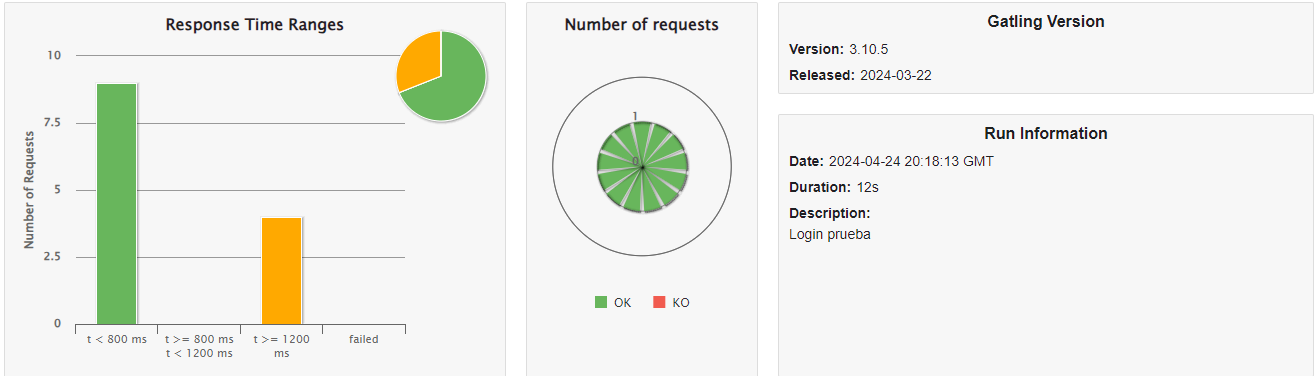

The other aspect we considered important to measure is the time a user takes to log in. We made this process deliberately slow because it helps to prevent brute-force attacks. As the test shows, a single login takes 12 seconds, which is longer than 500 requests to the game service.

12.4. Usability tests

We consider usability one of the most important aspects of a game, and people external to the project can see problems or design mistakes that we may overlook. For that reason we considered it advisable to run usability tests.

As we had to develop this project in a short time, we did not have time to run formal usability tests. Instead, we took as a reference chapter 9 of the book "Don’t Make Me Think" by Steve Krug, titled "Usability testing on 10 cents a day".

We tested the application with 6 users, organized in three groups of 2: those who study our degree but work on other projects; those with high computer expertise because they study something related to technology; and those who hardly use a computer.

Before the test we gave each participant a brief explanation of the game, without going into much detail, because we wanted them to discover its functionality themselves.

Below we summarize the main negative aspects that the users pointed out, grouped as explained above. In general, however, they liked the application and enjoyed playing.

12.4.1. Group 1: Study our degree

- They did not understand what the tags stand for.
- The game configuration can be opened during a game, so a pop-up should warn that this will end the game in progress.
- Signing off should show a confirmation message.
- It may be a good idea not to allow changing the language while a game is in progress.
- There is a lot of unused space on the sides of the screen.

12.4.2. Group 2: High computer expertise

- They did not understand what the tags stand for.
- There is a lot of unused space on the sides of the screen.
- Add help options to the questions, for example ruling out one of the wrong answers.
- They did not like that the ranking shows their scores to other players without consent.

12.4.3. Group 3: Hardly use a computer

- They did not understand what the tags stand for.
- The questions are very difficult.
- There are too many sports-themed questions.
- Music in the game would be a plus.

We will try to keep these points in mind during the development of our app. However, as we are at the end of the project and do not have much time, we will fix and implement only those we consider required or important.

13. Glossary

| Term | Definition |
|---|---|
| Wikidata | Collaborative platform for storing structured data, supporting multilingual information and facilitating connections between different pieces of knowledge. |
| Kanban | Work methodology focused on limiting work in progress, managing flow and continuously improving processes. |
| Microservices | Small, independent services that handle specific business functions. Each one operates independently. |
| API | An Application Programming Interface is a set of commands, functions, protocols, and objects that programmers use to create software or interact with an external system. |
| Agile Methodology | A set of principles, defined in the Agile Manifesto, for software development under which requirements and solutions evolve through the collaborative effort of self-organizing, cross-functional teams. |
| SCRUM | A framework that implements the Agile principles for developing, delivering, and sustaining complex products, with an initial emphasis on software development. |